Bio

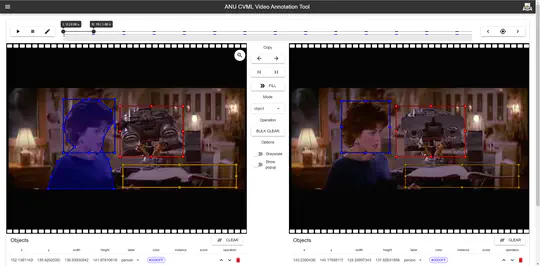

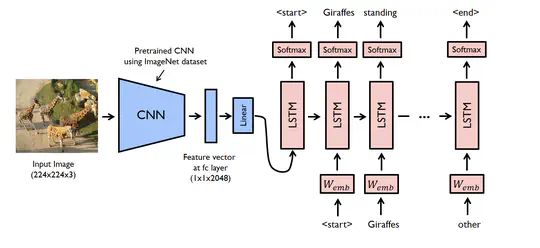

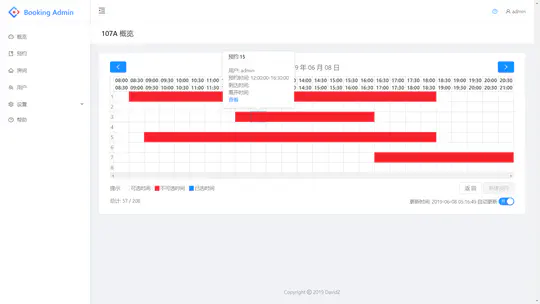

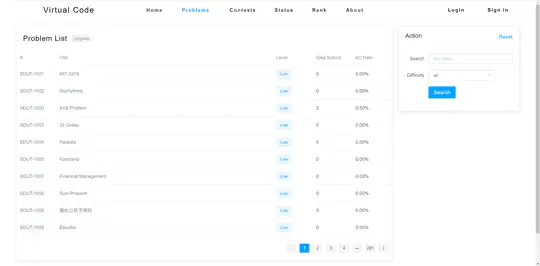

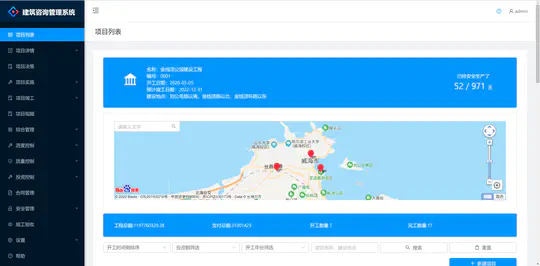

He is currently a third-year Ph.D. student in the Research School of Computer Science at The Australian National University. He is an enthusiastic early-career researcher interested in many deep learning topics, particularly video understanding and human action recognition. He is also an active full-stack web developer. His current research project is supervised by Professor Stephen Gould, Dr. Yizhak Ben-Shabat, Dr. Anoop Cherian, and Dr. Cristian Rodriguez. In 2021, he received bachelor's degrees in Advanced Computing (Honours) from The Australian National University and in Computer Science and Technology from Shandong University, Weihai.

- Deep Learning

- Computer Vision

- Web Development

Ph.D. in Computer Science, 2022 - Present

The Australian National University

Bachelor of Advanced Computing (Honours), 2019 - 2021

The Australian National University

Bachelor of Computer Science and Technology, 2017 - 2019

Shandong University, Weihai

Selected Publications

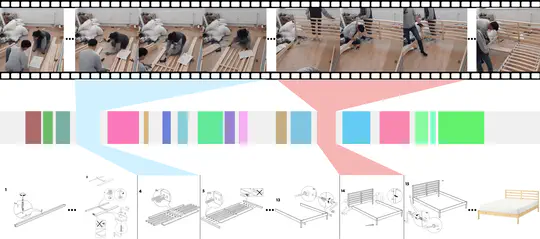

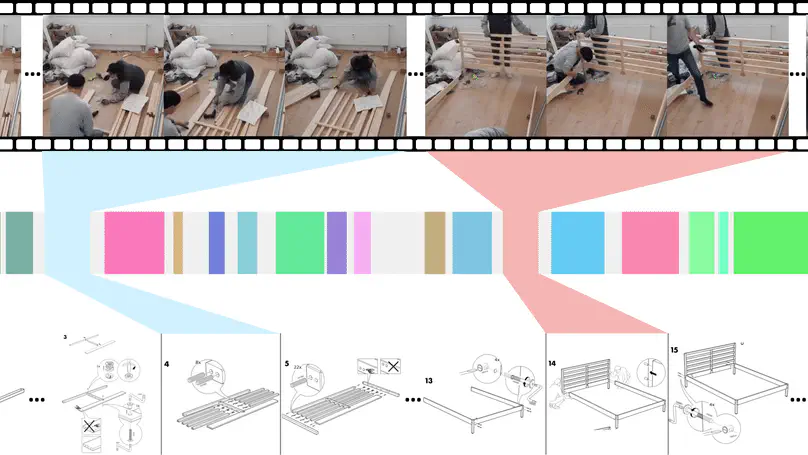

This paper introduces a supervised contrastive learning approach that learns to align videos with the subtle details of assembly diagrams, guided by a set of novel losses. To study this problem and evaluate the method, the authors introduce a new dataset, IAW (Ikea assembly in the wild), consisting of 183 hours of videos from diverse furniture assembly collections and nearly 8,300 illustrations from the associated instruction manuals, annotated with ground-truth alignments. They define two tasks on this dataset: first, nearest-neighbor retrieval between video segments and illustrations; second, alignment of instruction steps with the segments of each video. Extensive experiments on IAW show that the approach outperforms the alternatives. (Generated by New Bing).

Publications

Since 2022

Projects

Since 2017

Experience

Since 2017

Accomplishments

Since 2017