Bio

He is a final-year Ph.D. student in the Research School of Computing at The Australian National University. His research interests span many deep learning topics, particularly video understanding and generation, and he is also an active full-stack web developer. His current research project is supervised by Professor Stephen Gould, Dr. Anoop Cherian, Dr. Yizhak Ben-Shabat, and Dr. Cristian Rodriguez. In 2021, he received bachelor’s degrees in Advanced Computing (Honours) and in Computer Science and Technology from The Australian National University and Shandong University, respectively.

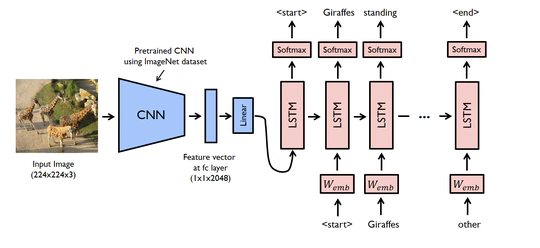

- Video Understanding & Generation

- Agentic & Embodied AI

- Web Development

Ph.D. in Computer Science, 2022 - 2026

The Australian National University

Bachelor of Advanced Computing (Honours), 2019 - 2021

The Australian National University

Bachelor of Computer Science and Technology, 2017 - 2019

Shandong University

Selected Publications

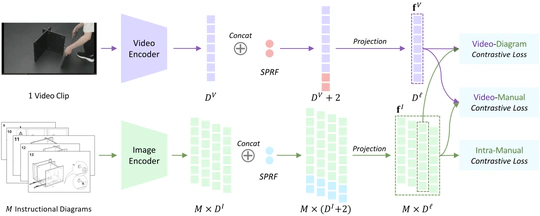

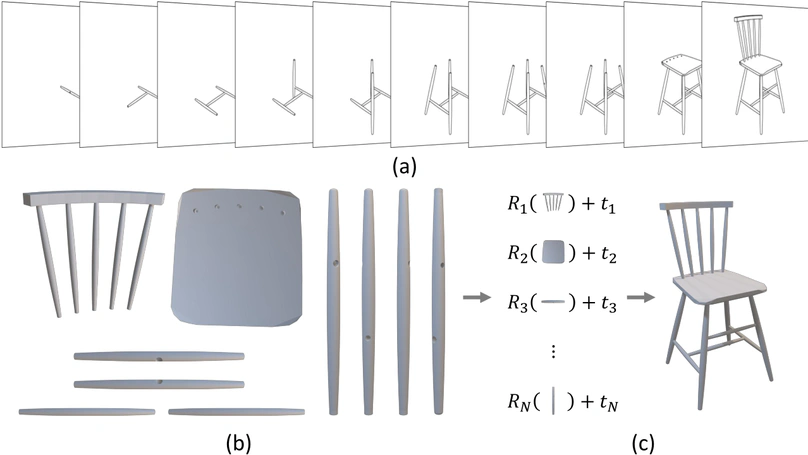

We introduce Manual-PA, a transformer-based framework that leverages diagrammatic assembly manuals to guide both the selection and 6D pose estimation of furniture parts, enabling efficient and realistic 3D assembly by aligning parts with instructional illustrations.

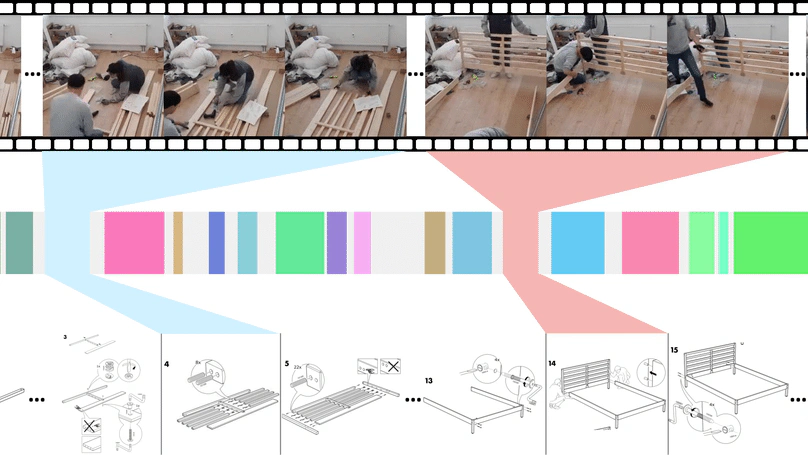

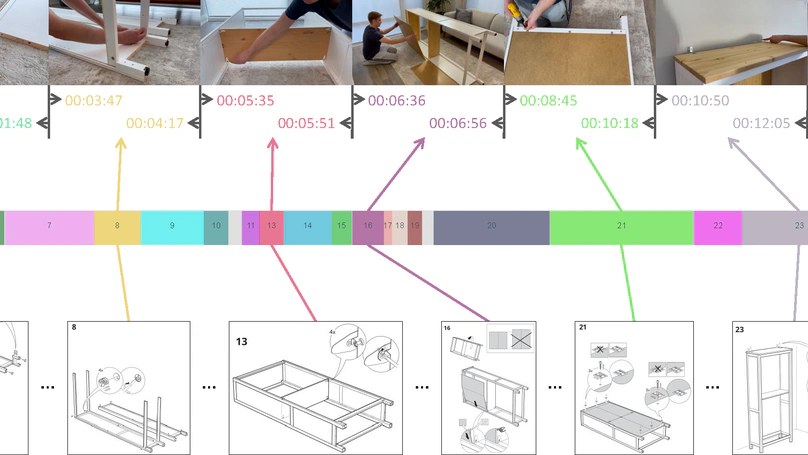

We introduce a new approach to simultaneously localize a sequence of instructional diagrams in videos by modeling their mutual relationships and temporal order, rather than handling each step independently.

Publications

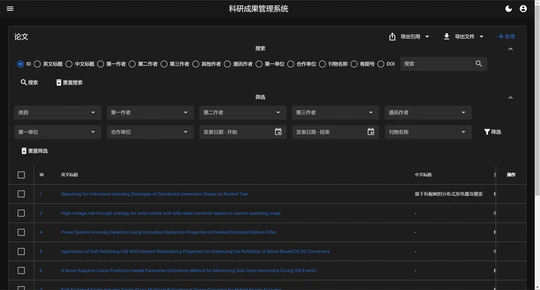

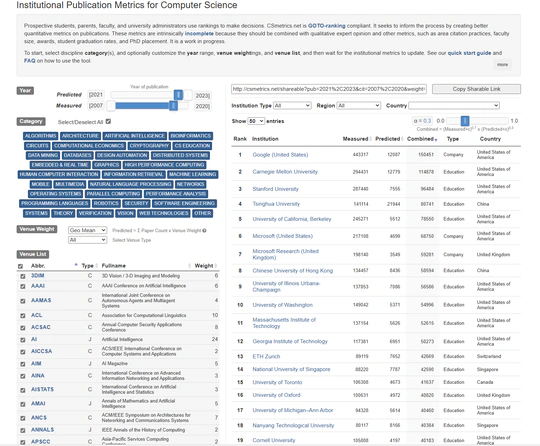

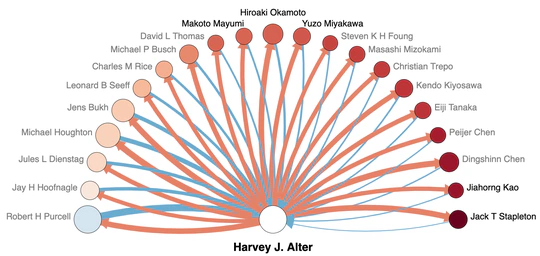

Projects